📢 News

Introduction

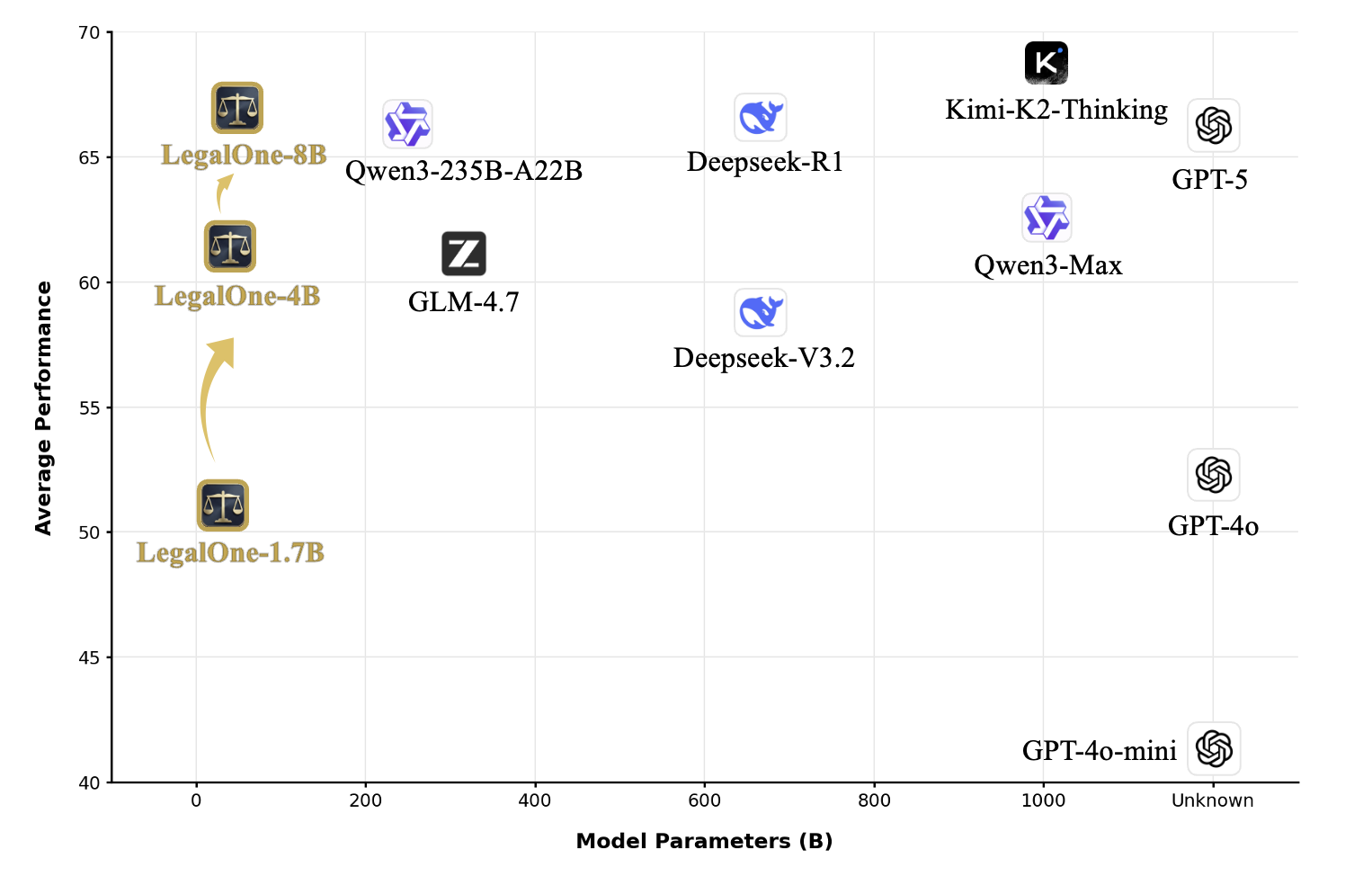

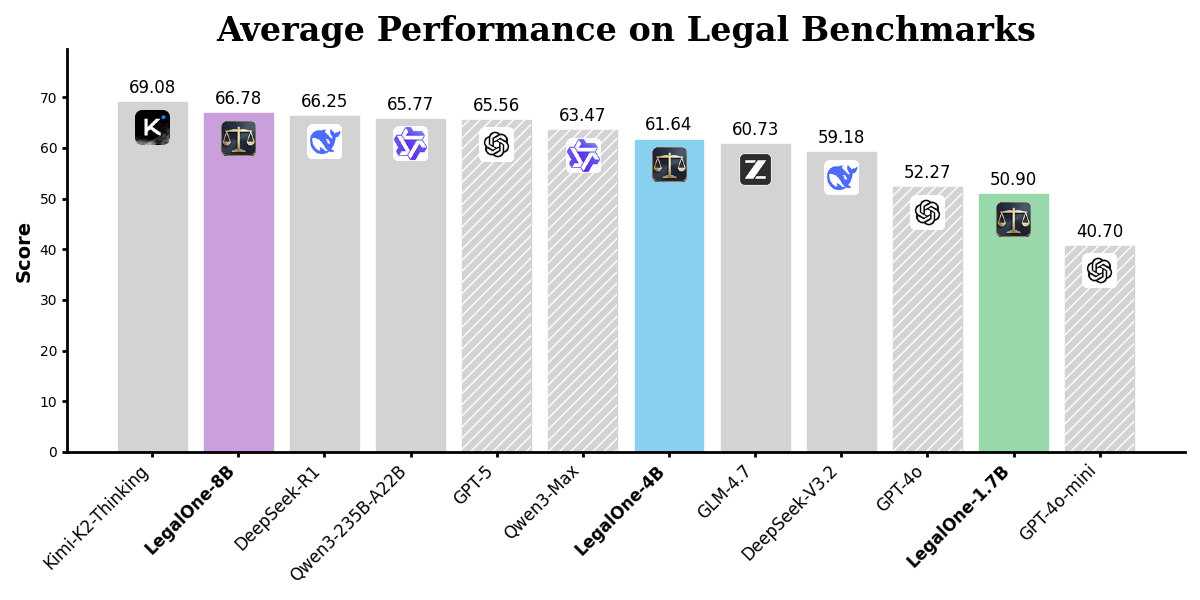

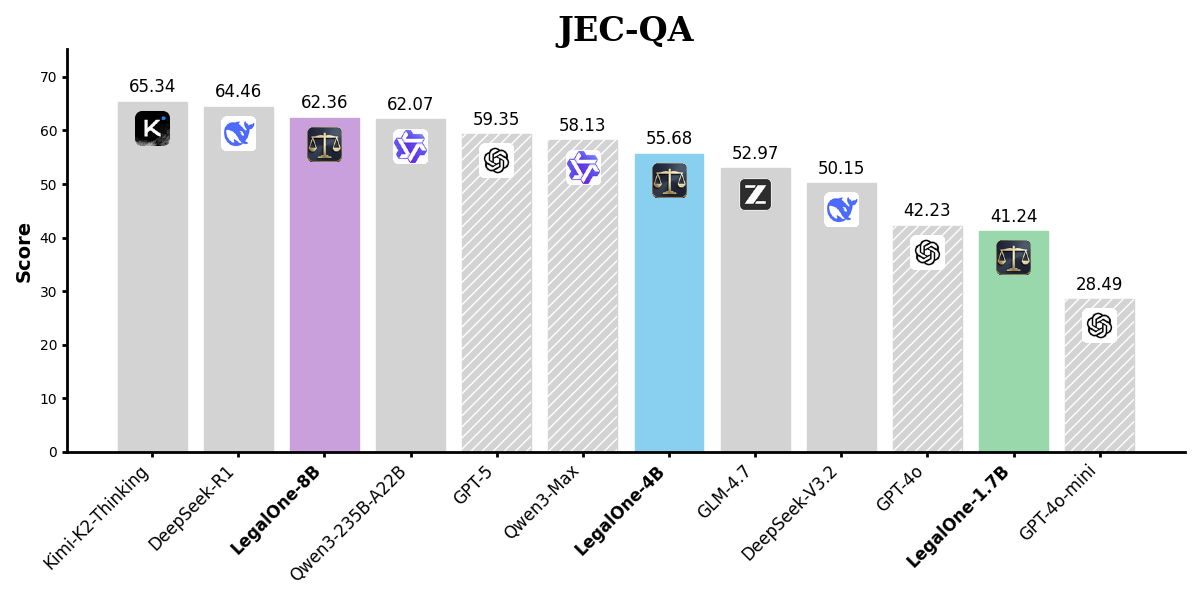

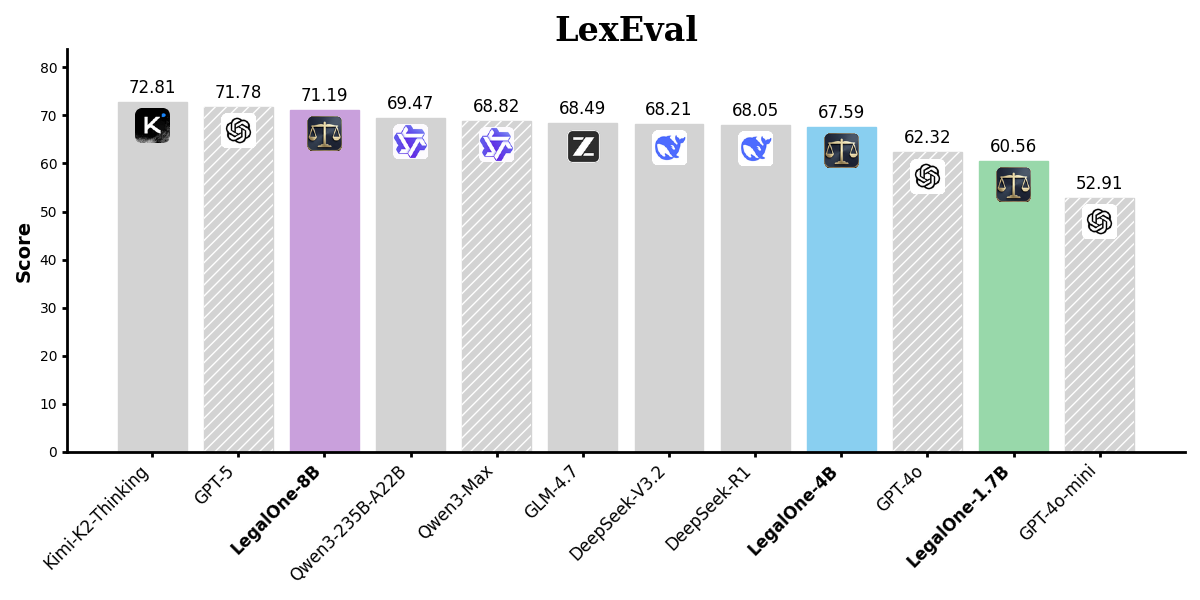

While Large Language Models (LLMs) have demonstrated impressive general capabilities, their direct application in the legal domain is often hindered by a lack of precise domain knowledge and by the complexity of rigorous multi-step judicial reasoning. To address this gap, we present LegalOne, a family of foundation models tailored to the Chinese legal domain. LegalOne is developed through a three-phase pipeline (mid-training, supervised fine-tuning, and reinforcement learning) designed to master legal reasoning. Experimental results demonstrate that LegalOne achieves state-of-the-art performance across a wide range of legal tasks, surpassing general-purpose LLMs with vastly larger parameter counts through enhanced knowledge density and efficiency.

Pipeline

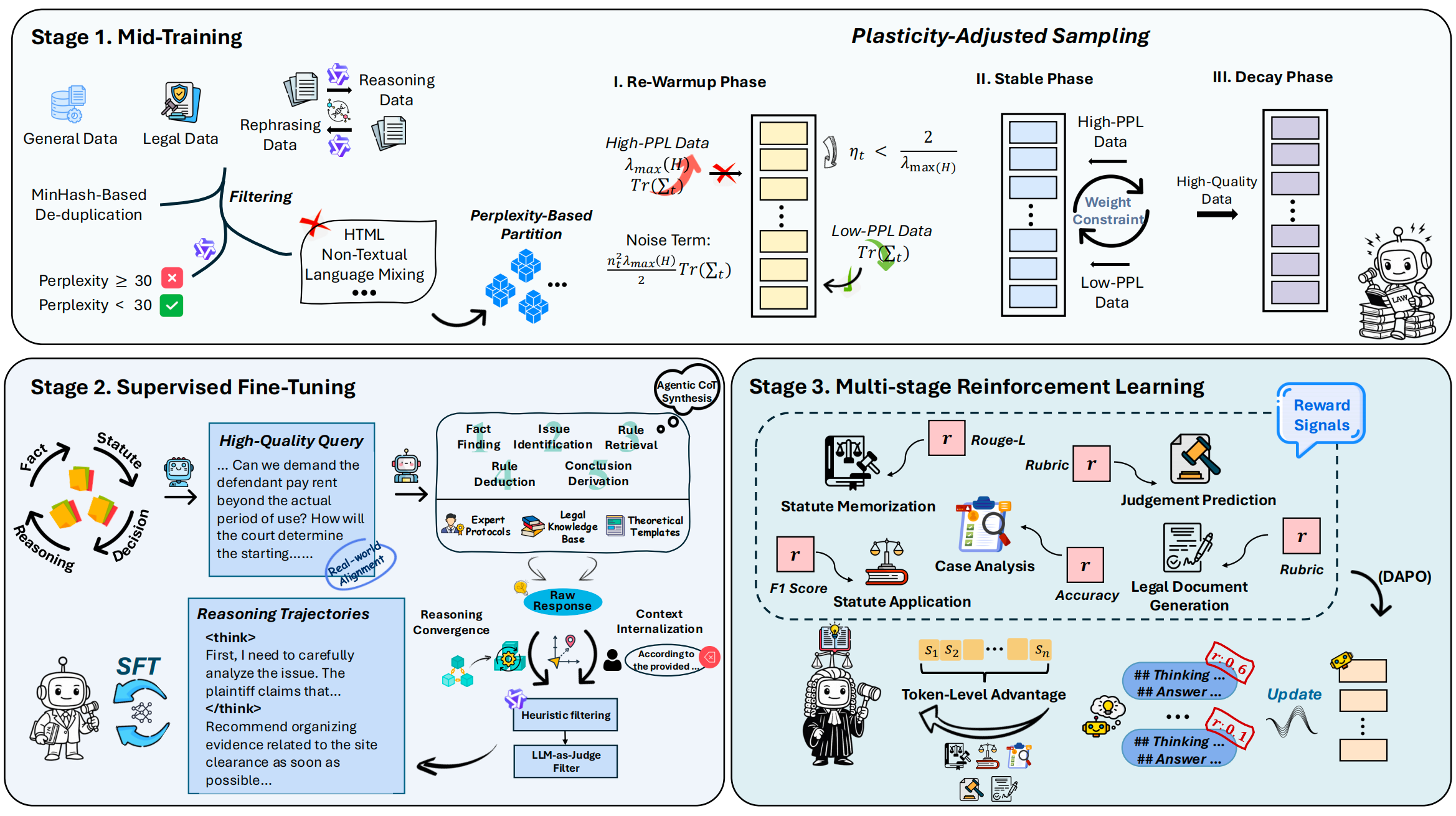

LegalOne is developed through a three-phase pipeline designed to master legal reasoning. First, during the mid-training phase, we propose Plasticity-Adjusted Sampling (PAS) to address the challenge of domain adaptation. This perplexity-based scheduler balances the acquisition of new knowledge against the retention of original capabilities, establishing a robust legal foundation. Second, during supervised fine-tuning, we employ Legal Agentic CoT Distillation (LEAD) to distill explicit reasoning from raw legal texts. Unlike naive distillation, LEAD uses an agentic workflow to convert complex judicial processes into structured reasoning trajectories, thereby enforcing factual grounding and logical rigor. Finally, we implement a Curriculum Reinforcement Learning (RL) strategy. Through a progressive reinforcement process spanning memorization, understanding, and reasoning, LegalOne evolves from simple pattern matching to autonomous and reliable legal reasoning.
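To make the idea of a perplexity-based sampling scheduler more concrete, here is a minimal Python sketch. It is not the PAS algorithm itself: this page does not specify how PAS maps perplexity to sampling probabilities, so the Gaussian weighting in log-perplexity space, the function names, and the `target_ppl` and `width` parameters are all assumptions made for illustration. The sketch favors samples of moderate difficulty, on the assumption that very low perplexity means little new knowledge and very high perplexity risks disrupting existing capabilities.

```python
import math
import random


def perplexity(token_logprobs):
    """Perplexity is exp of the negative mean per-token log-probability."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def sampling_weights(perplexities, target_ppl, width=1.0):
    """Hypothetical weighting (an assumption, not the published PAS rule).

    Each sample gets a Gaussian score in log-perplexity space centered on
    log(target_ppl); scores are then normalized into a distribution.
    """
    center = math.log(target_ppl)
    raw = [
        math.exp(-((math.log(p) - center) ** 2) / (2 * width ** 2))
        for p in perplexities
    ]
    total = sum(raw)
    return [r / total for r in raw]


def sample_batch(corpus, weights, batch_size, seed=0):
    """Draw a training batch (with replacement) according to the weights."""
    rng = random.Random(seed)
    return rng.choices(corpus, weights=weights, k=batch_size)


# Illustrative usage with made-up perplexity values for three documents.
docs = ["already-known text", "moderately novel statute", "noisy scan"]
ppls = [1.5, 8.0, 200.0]
weights = sampling_weights(ppls, target_ppl=8.0)
batch = sample_batch(docs, weights, batch_size=4)
```

In a real scheduler, `target_ppl` or `width` would presumably change over the course of training, which is where a "plasticity" adjustment could enter; that schedule is left out here because the source gives no details.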

Results

Case Study

Citation

@misc{li2026legalonefamilyfoundationmodels,
  title={LegalOne: A Family of Foundation Models for Reliable Legal Reasoning},
  author={Haitao Li and Yifan Chen and Shuo Miao and Qian Dong and Jia Chen and Yiran Hu and Junjie Chen and Minghao Qin and Qingyao Ai and Yiqun Liu and Cheng Luo and Quan Zhou and Ya Zhang and Jikun Hu},
  year={2026},
  eprint={2602.00642},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2602.00642},
}

LegalOne Paper

LegalOne Repo
